Google Summer of Code Proposals for Elastic

Elastic is participating in Google Summer of Code (GSoC) for the second time in 2020 after our premiere in 2018. I’m our organization admin again, which mostly means shepherding, while Greg Thompson, Chandler Prall, and Dave Snider do the actual (mentoring) work.

This post is a recap of the proposal period as a data point for:

- myself in case we participate again (I have little recollection of our 2018 stats),

- other organizations for comparison, and

- students to get a better understanding of the competitive situation they are facing.

According to the GSoC mailings, this has been another record year:

The student submission period is now over — and the numbers were much higher than ever before — over 51,000 students registered for the program this year (a 65% increase from the previous high)! 6,335 students submitted their final proposals and applications for you all to review over these next couple of weeks (that’s a 13% increase over last year).

Requirements

From my previous experiences — student in 2007, mentor in 2012, and organization admin in 2018 — we have adopted rather strict requirements:

- Outline of project deliveries.

- Schedule in one-week intervals and a special section for any time not available during GSoC like exams or trips.

- A pull request to the project; it doesn’t have to be merged (yet), but we want to see some code.

The last point in particular is not trivial, but it is valuable. It shows that students can work with the necessary tools, such as Git and the build and test tooling. We see how well we can work together while iterating on the pull request. And there is an immediate benefit to the project, which makes it more valuable for everyone than a coding exercise: students can show real contributions, the project improves, and the mentors don’t spend time checking results that are going to /dev/null right away.

We suggested three project ideas, which is also the absolute maximum we will be able to mentor; we might even reduce it to two.

Statistics

How did it turn out? Very well. It will be hard to pick the students, which is both a great and unfortunate problem to have.

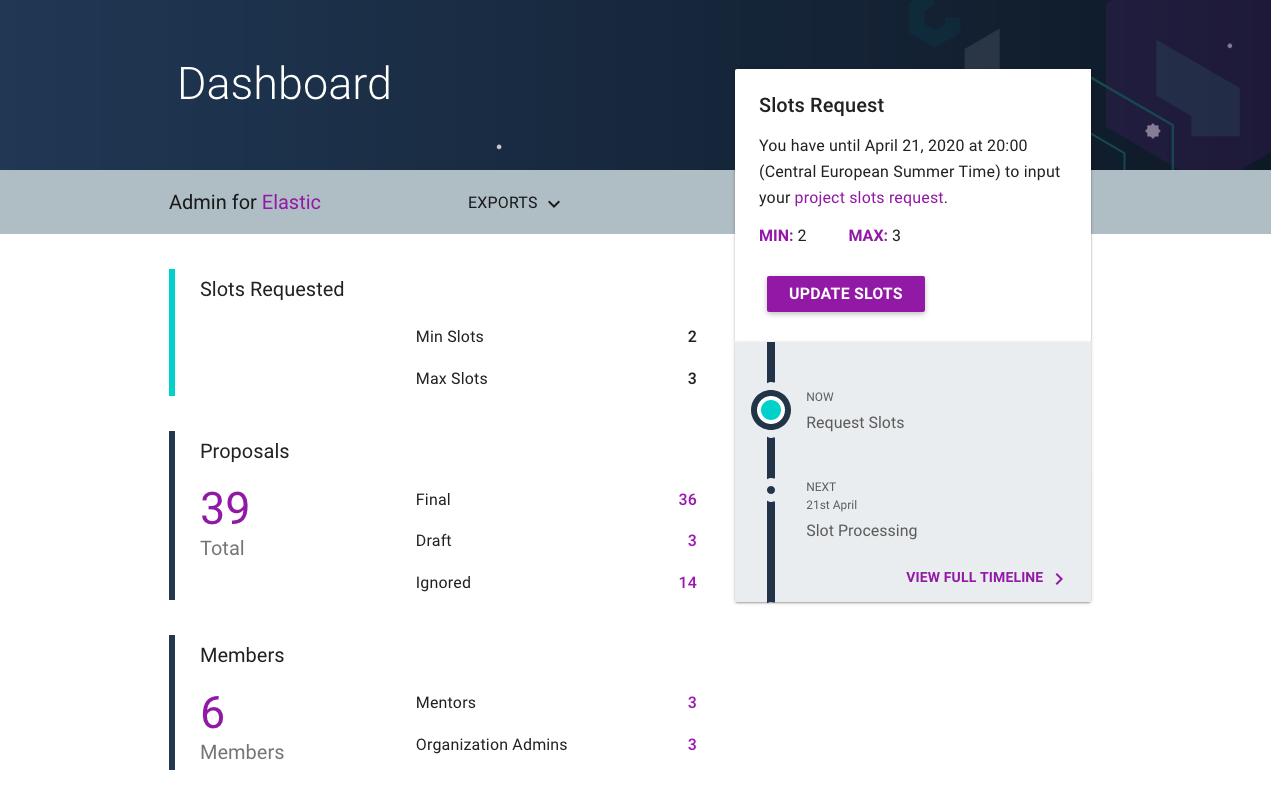

I’ve starred the proposals of those who have submitted a PR and ignored any submissions that didn’t follow our requirements: CVs without a project idea, proposals for out-of-scope projects, or applications built on top of our stack. The GSoC dashboard already gives a rough overview of the results:

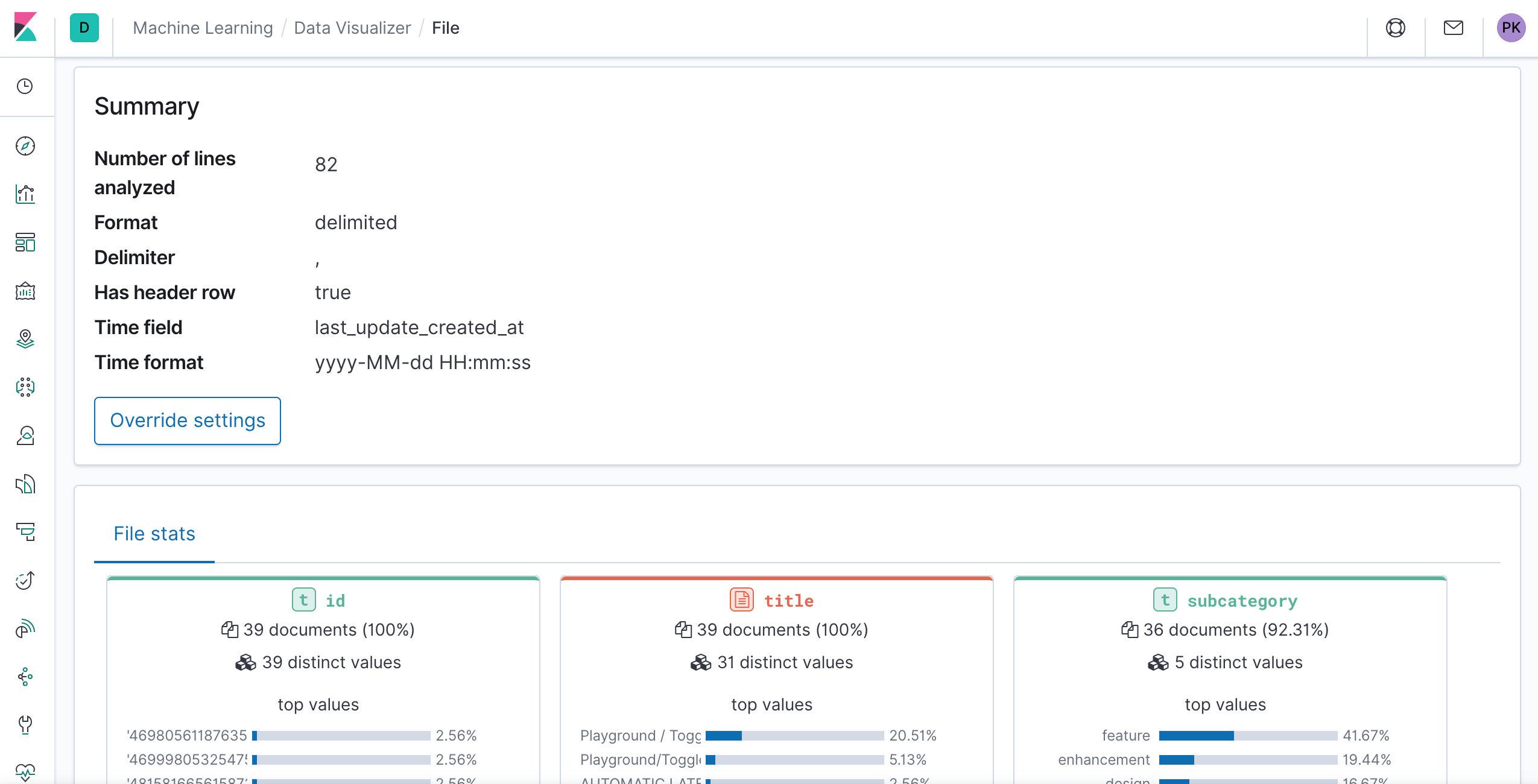

But you can visualize this better by exporting the data as CSV and importing it into Kibana. As the French would say, «drink your own champagne», which sounds way nicer than 🐶🍽.

There is only one minor change needed in the CSV: lowercase True and False so that they are picked up as boolean values and not as keyword fields. Kibana’s Data Visualizer can then do the work of uploading the file, generating the mapping, importing it, and creating the index pattern.

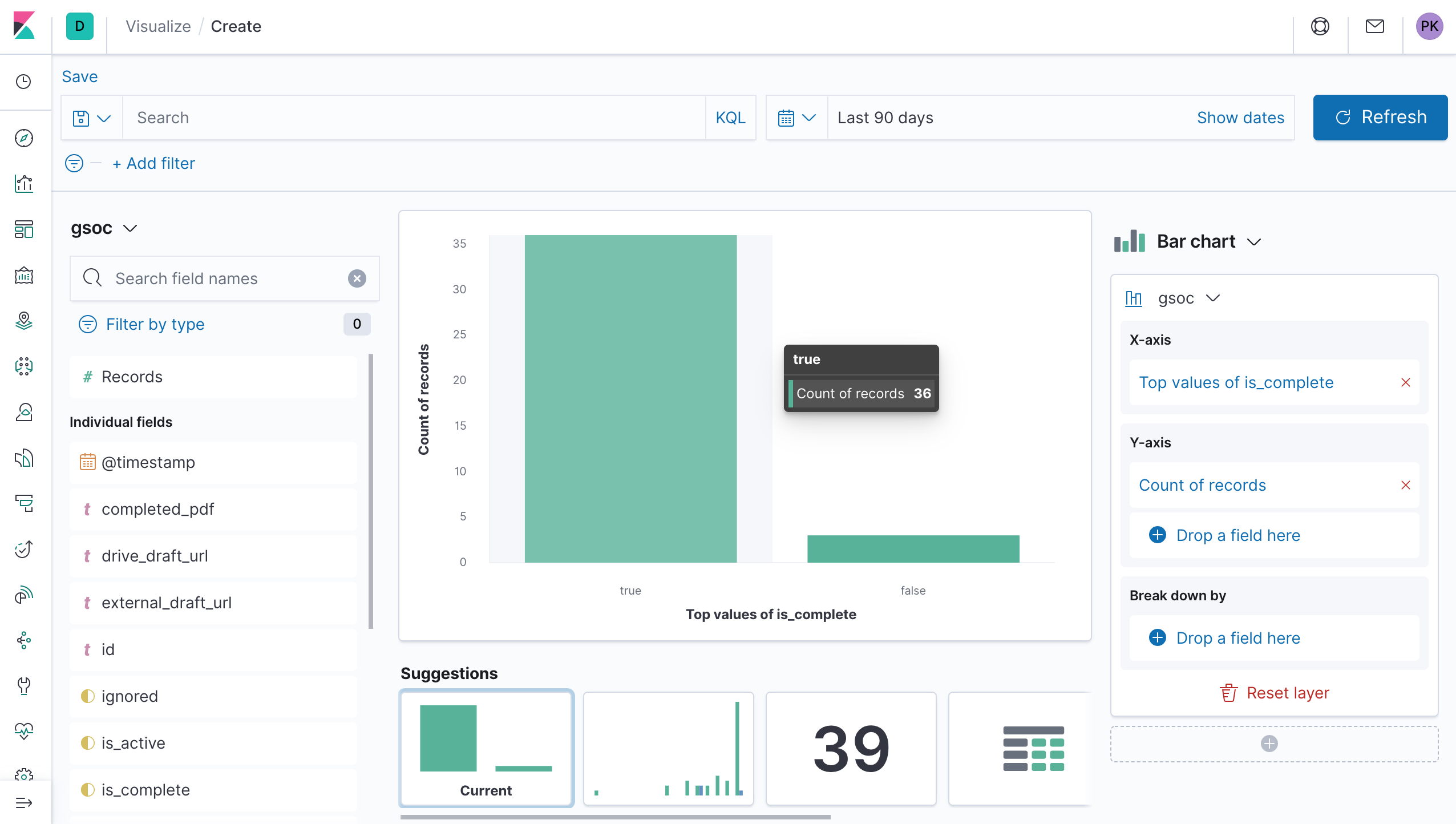

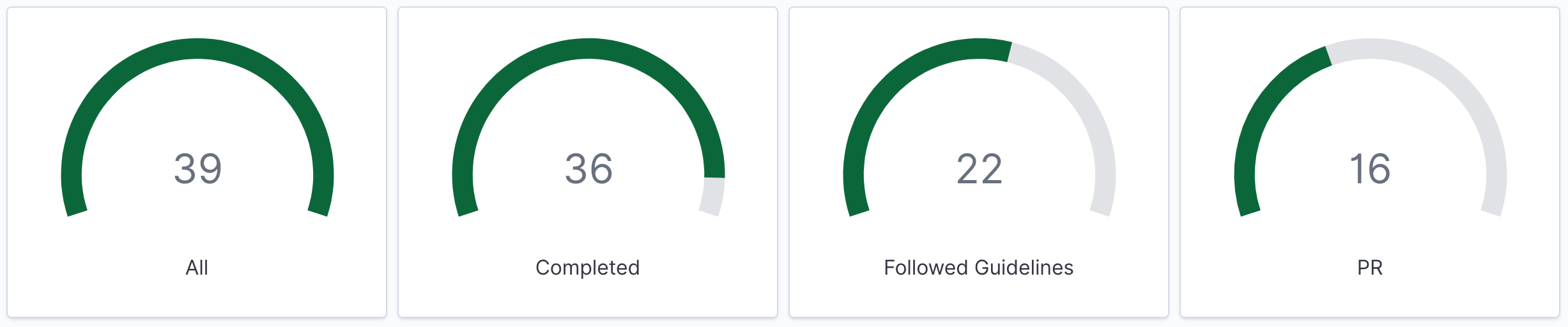
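The CSV tweak described above can be scripted instead of done by hand. This is a minimal sketch, assuming the export is a plain comma-separated file in which booleans appear as the exact cell values True and False; the `title` column and the sample row are made up for illustration and are not taken from the real export.

```python
import csv
import io


def lowercase_booleans(src, dst):
    """Copy CSV rows from src to dst, rewriting True/False cells as true/false."""
    reader = csv.reader(src)
    writer = csv.writer(dst, lineterminator="\n")
    for row in reader:
        # Only touch cells that are exactly "True" or "False";
        # free text such as proposal titles is left unchanged.
        writer.writerow(
            [cell.lower() if cell in ("True", "False") else cell for cell in row]
        )


# Hypothetical sample standing in for the exported file.
sample = "title,is_complete,ignored,starred\nExample proposal,True,False,True\n"
converted = io.StringIO()
lowercase_booleans(io.StringIO(sample), converted)
print(converted.getvalue())
```

For a real file, the same function can be called with two handles from `open(..., newline="")`. Matching whole cells rather than doing a global search-and-replace avoids changing a title that happens to contain the word "True".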

With Kibana Lens I can quickly look at the is_complete, ignored, and starred statistics:

These reveal:

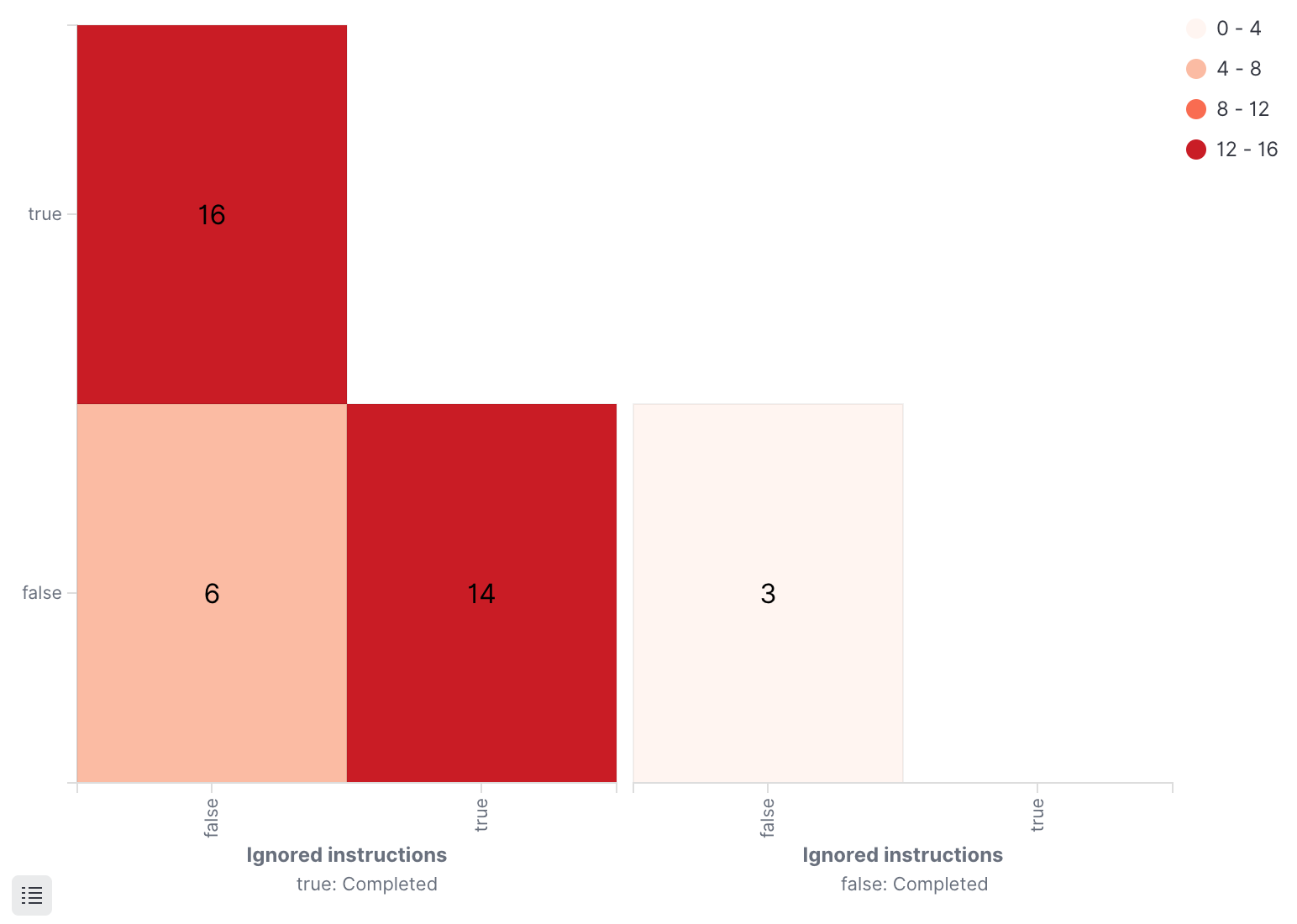

- 39 started proposals by 33 different students.

- 3 of 39 didn’t complete the proposal.

- 14 of the remaining 36 didn’t follow the application guidelines.

- But 16 of the remaining 22 submitted a PR.
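The funnel in the list above is plain filtering on the three boolean fields. The following sketch recomputes it, assuming each proposal is a record with `is_complete`, `ignored`, and `starred` flags. The rows are synthetic, reconstructed only from the counts in this post (not the real export), and the assumption that incomplete proposals were neither ignored nor starred is mine.

```python
def proposal_funnel(rows):
    """Narrow proposals step by step: complete, within guidelines, with a PR."""
    complete = [r for r in rows if r["is_complete"]]
    followed = [r for r in complete if not r["ignored"]]
    with_pr = [r for r in followed if r["starred"]]
    return {
        "started": len(rows),
        "complete": len(complete),
        "followed_guidelines": len(followed),
        "with_pr": len(with_pr),
    }


def make_rows(count, is_complete, ignored, starred):
    """Build `count` synthetic proposal records with the given flags."""
    return [
        {"is_complete": is_complete, "ignored": ignored, "starred": starred}
        for _ in range(count)
    ]


# Synthetic data mirroring the counts above: 3 incomplete, 14 ignored,
# 16 starred (with a PR), and 6 complete proposals without a PR.
rows = (
    make_rows(3, False, False, False)
    + make_rows(14, True, True, False)
    + make_rows(16, True, False, True)
    + make_rows(6, True, False, False)
)

funnel = proposal_funnel(rows)
print(funnel)
```

Each step filters the result of the previous one, which is why the denominators shrink from 39 to 36 to 22.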

If you prefer a visual representation as a dashboard:

And with a heatmap, I can combine all three attributes:

Conclusion

So far, so good: the numbers look promising. Now we need to pick the right students and “just” do the actual work.

And there is one great outcome already: In the EUI contributions of February and March I can spot multiple GSoC students far up in the list.

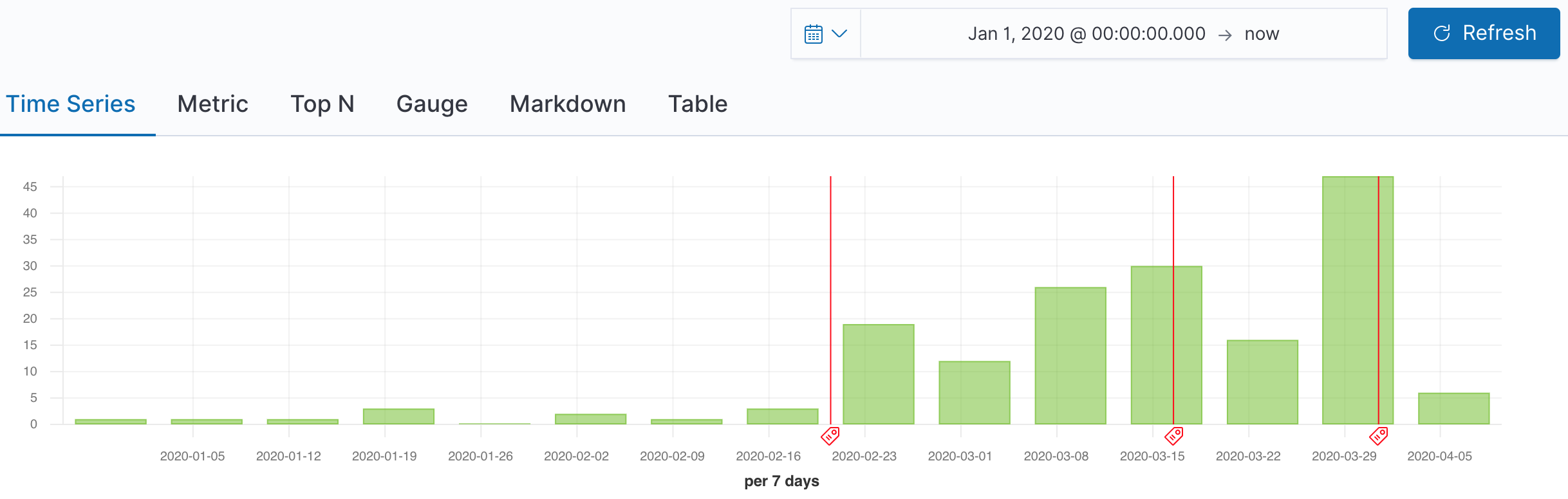

Or to show it another way: these are the PRs matching the filter not author_association.keyword : "MEMBER", that is, the ones from everyone except Elasticians. I’ve annotated the GSoC timeline with three milestones:

- List of accepted mentoring organizations published.

- Student application period begins.

- Student application deadline.

You can see the “GSoC effect.”
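For readers without Kibana at hand, the same filter can be approximated in a few lines. This is only a sketch, assuming PR records shaped like GitHub API pull request objects with `author_association` and `created_at` fields; the sample records are invented for illustration and are not EUI data.

```python
from collections import Counter


def external_prs_per_month(pull_requests):
    """Count PRs per month (YYYY-MM), excluding authors who are organization members."""
    counts = Counter()
    for pr in pull_requests:
        # Mirrors the negated filter: keep everything that is not "MEMBER",
        # including records where the field is missing.
        if pr.get("author_association") != "MEMBER":
            month = pr["created_at"][:7]  # ISO 8601 timestamp, so YYYY-MM
            counts[month] += 1
    return dict(sorted(counts.items()))


# Hypothetical records, not real contribution data.
sample_prs = [
    {"author_association": "MEMBER", "created_at": "2020-02-03T10:00:00Z"},
    {"author_association": "CONTRIBUTOR", "created_at": "2020-02-21T08:30:00Z"},
    {"author_association": "NONE", "created_at": "2020-03-02T14:15:00Z"},
    {"author_association": "FIRST_TIME_CONTRIBUTOR", "created_at": "2020-03-19T17:45:00Z"},
]

monthly = external_prs_per_month(sample_prs)
print(monthly)
```

Plotting the resulting counts over time gives the same kind of curve as the annotated chart.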